The Evolution of the Microcontroller: A Journey Through the Development History of the MCU

Introduction

The microcontroller unit (MCU) stands as one of the most transformative and ubiquitous technologies of the modern digital age. Embedded in everything from household appliances and automotive systems to sophisticated medical devices and industrial robots, these tiny, self-contained computers silently power our world. The development history of the MCU is a fascinating tale of miniaturization, integration, and relentless innovation, driven by the demands of efficiency, cost, and functionality. From its humble beginnings as a simple processor with minimal support to today’s powerful systems-on-chip (SoCs), the MCU’s evolution mirrors the broader trajectory of computing itself. This article delves into the key phases of this journey, exploring how technological breakthroughs and market needs shaped the intelligent silicon hearts that now number in the billions around the globe.

The Genesis: Birth and Early Foundations (1970s)

The story of the microcontroller begins in the early 1970s. The first microprocessors of that period needed numerous external chips (memory, input/output ports, timers) to form a functional system, and that complexity was impractical for cost-sensitive, space-constrained embedded control applications.

The pivotal moment arrived in 1971, when Texas Instruments engineers Gary Boone and Michael Cochran developed the first single-chip microcontroller. The design reached the commercial market in 1974 as the TMS 1000, widely recognized as the world’s first microcontroller. It integrated a 4-bit CPU, read-only memory (ROM), random-access memory (RAM), and clock circuitry on a single chip. While primitive by today’s standards, it established the fundamental paradigm: a complete computer on one piece of silicon designed for dedicated control tasks.

Intel followed in 1976 with the 8048, five years after its 4004 microprocessor. The Intel 8048 became a landmark design, cementing the MCU’s architecture. It combined an 8-bit CPU, 1 KB of on-chip program memory (mask ROM in the 8048 itself, EPROM in the companion 8748), 64 bytes of RAM, and I/O ports in a 40-pin package. Its success proved the commercial viability of single-chip control solutions for keyboards, early automotive functions, and appliance control. This era was defined by 4-bit and 8-bit architectures, limited memory, and arduous development processes that relied on assembly language and specialized hardware programmers. These early chips laid the groundwork for all subsequent advancements.

The Era of Expansion and Standardization (1980s-1990s)

The 1980s and 1990s witnessed explosive growth and diversification in the MCU market. This period was characterized by architectural wars, the rise of powerful development tools, and the establishment of families that would become industry standards.

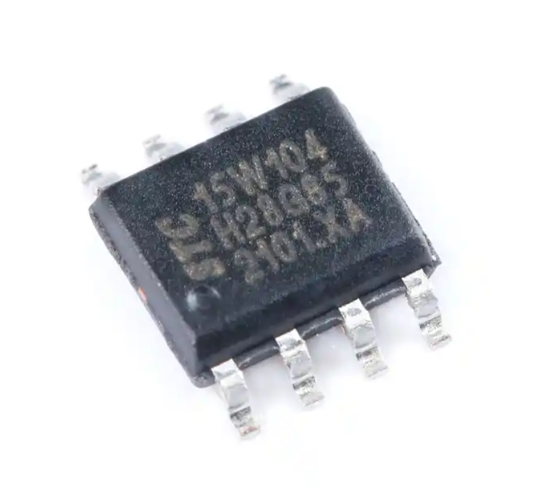

A monumental leap occurred in 1980 with Intel’s introduction of the 8051. Its Harvard architecture (separate program and data memory spaces), rich instruction set, multiple timers, serial port (UART), and extensive interrupt structure made it phenomenally successful. The 8051’s core was licensed to numerous semiconductor manufacturers, creating a vast ecosystem of compatible parts that remains in production today. It became the definitive 8-bit MCU platform.

During the same period, Motorola (whose semiconductor business later became Freescale and is now part of NXP) championed MCUs derived from its 6800 microprocessor, most notably the highly popular 68HC11 series, known for robustness and on-chip peripherals such as analog-to-digital converters (ADCs). This competition fueled rapid innovation in peripheral integration: ADCs, PWM controllers, and serial communication interfaces (I2C, SPI) became common inclusions.

The late 1980s saw the emergence of Reduced Instruction Set Computer (RISC) architectures in the MCU space. Companies like Microchip Technology revolutionized the market with their PIC series, offering low-cost, high-performance RISC-based MCUs with a small footprint. Atmel followed in the mid-1990s with the AVR architecture, in which most instructions execute in a single clock cycle. Crucially, development moved away from pure assembly language with the advent of compilers for high-level languages such as C and of more accessible in-circuit emulators and debuggers, dramatically lowering the barrier to entry for engineers.
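To put the single-cycle claim in perspective, the following is a rough sketch of peak instruction throughput at a given clock. The function name `peak_mips` and the 12 MHz clock are illustrative assumptions; the figure of 12 oscillator clocks per machine cycle is that of the classic 8051 core, and real throughput is lower wherever instructions need more than one cycle.

```python
def peak_mips(clock_hz: float, clocks_per_instruction: float) -> float:
    """Upper bound on throughput, in millions of instructions per second.

    Assumes every instruction takes exactly `clocks_per_instruction`
    oscillator clocks, which is a best case on real parts.
    """
    if clock_hz <= 0 or clocks_per_instruction <= 0:
        raise ValueError("clock and clocks-per-instruction must be positive")
    return clock_hz / clocks_per_instruction / 1e6


if __name__ == "__main__":
    clock_hz = 12_000_000  # assumed 12 MHz clock for both parts

    # Classic 8051: one machine cycle spans 12 oscillator clocks.
    print("classic 8051 peak MIPS:", peak_mips(clock_hz, 12))

    # Single-cycle core: one instruction per clock in the best case.
    print("single-cycle core peak MIPS:", peak_mips(clock_hz, 1))
```

At the same clock, the best-case gap is simply the ratio of clocks per instruction, which is why single-cycle cores could deliver more work per megahertz.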

The Modern Age: Connectivity, Power Efficiency, and SoC Integration (2000s-Present)

The new millennium propelled MCUs into an era defined by connectivity, ultra-low power consumption, and staggering levels of integration. The driving forces were the Internet of Things (IoT), portable battery-powered devices, and demands for smarter, more autonomous systems.

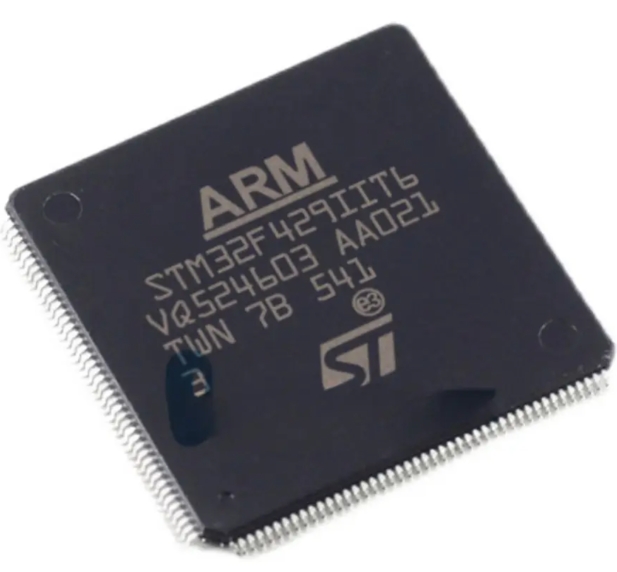

A major trend has been the dominance of 32-bit ARM Cortex-M cores. ARM Holdings’ business model of licensing core designs allowed semiconductor companies like STMicroelectronics (STM32), NXP (LPC, Kinetis), and Texas Instruments to create powerful, standardized, yet highly differentiated MCU families. The Cortex-M series offers scalable performance, from simple M0+ cores to feature-rich M4 and M7 cores with DSP extensions and optional floating-point units.

Ultra-low-power (ULP) design became a critical battleground. Modern MCUs feature sophisticated power management units (PMUs), multiple low-power sleep modes (deep sleep, standby), and dynamic voltage and frequency scaling (DVFS) to enable devices that can run for years on a single coin-cell battery.
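The "years on a coin cell" claim follows from duty-cycling arithmetic, sketched below. All numbers are illustrative assumptions rather than figures for any specific part: a 225 mAh coin cell, 5 mA while active, 1 µA in sleep, and 10 ms of activity every 10 s. The estimate also ignores battery self-discharge and regulator losses, so it is an optimistic bound.

```python
def average_current_ma(active_ma: float, sleep_ma: float,
                       active_s: float, period_s: float) -> float:
    """Time-weighted average current (mA) of a duty-cycled device."""
    if period_s <= 0 or not (0 <= active_s <= period_s):
        raise ValueError("need 0 <= active_s <= period_s and period_s > 0")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_years(capacity_mah: float, avg_ma: float) -> float:
    """Idealized runtime in years: capacity divided by average current."""
    if capacity_mah <= 0 or avg_ma <= 0:
        raise ValueError("capacity and average current must be positive")
    hours = capacity_mah / avg_ma
    return hours / (24 * 365)


if __name__ == "__main__":
    # Assumed values for illustration only.
    avg = average_current_ma(active_ma=5.0, sleep_ma=0.001,
                             active_s=0.010, period_s=10.0)
    years = battery_life_years(capacity_mah=225.0, avg_ma=avg)
    print(f"average current: {avg * 1000:.2f} uA")
    print(f"estimated life:  {years:.1f} years")
```

Under these assumptions the average draw is about 6 µA, giving a little over four years. Because the device sleeps 99.9% of the time, the sleep current contributes a meaningful share of the total, which is why the low-power modes matter as much as active efficiency.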

Furthermore, connectivity has been integrated directly onto the MCU die. It is now commonplace to find chips with built-in radios for Wi-Fi, Bluetooth Low Energy (BLE), Zigbee, or even cellular NB-IoT alongside traditional peripherals. This transforms an MCU from a simple controller into a connected edge node.

The line between MCUs and microprocessors (MPUs) has blurred with the rise of Microcontroller-based Systems-on-Chip (MCU-SoCs). These devices integrate not just digital peripherals but also advanced analog components, security accelerators (for AES, SHA), dedicated graphics processors, and even AI/ML accelerators for tinyML applications.

Conclusion

The development history of the MCU is a remarkable chronicle of technological convergence. From its inception as a minimalist integrated controller to its current state as a powerful, connected, and intelligent SoC platform, the MCU has continually evolved to meet the challenges of each new generation of electronic products. Each phase—from integration of core components in the 1970s to peripheral expansion in the late 20th century and today’s focus on connectivity and intelligence—has expanded the horizons of what embedded design can achieve. As we look toward a future filled with increasingly autonomous and interconnected devices at the edge of networks, the microcontroller will undoubtedly continue its evolution, becoming ever more capable, efficient, and secure. Its history is not just about silicon; it is about enabling innovation across every facet of modern life.