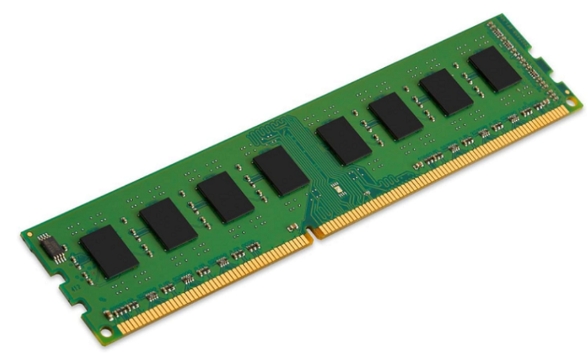

Samsung Electronics has announced that its entire 2026 production capacity for High Bandwidth Memory (HBM) is already fully booked, with new orders continuing to arrive. The company is now evaluating production line expansions to meet explosive demand driven by the global AI boom.

The company confirmed that its HBM3E products are now in mass production and are being supplied to "all relevant customers," a phrase widely interpreted to include NVIDIA. Samsung is also advancing its next-generation HBM4, which features a 12-layer stack built on its 1c (10 nm-class) DRAM process and delivers bandwidth of up to 2 TB/s. Samples have been sent to clients, with mass production planned for next year to pair with NVIDIA's upcoming "Rubin" GPU platform.

This strategic focus on HBM is creating a supply squeeze for other memory segments. Samsung is prioritizing HBM and advanced DRAM, which is limiting the availability of memory for mobile devices and PCs and contributing to significant price increases for traditional memory products.

ICgoodFind: The HBM capacity crunch underscores the strategic value of HBM in the AI era, with Samsung's expansion plans set to reshape the high-end memory market.