AI company Anthropic is significantly expanding its partnership with Google, planning an investment of tens of billions of dollars to secure up to one million AI chips for training its next-generation Claude models.

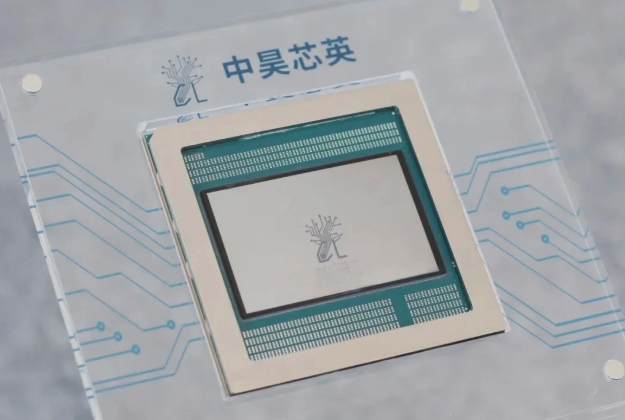

Under the agreement, Anthropic will gain access to over one gigawatt of computing power, scheduled to come online in 2026. This capacity will be built entirely on Google's custom Tensor Processing Units (TPUs), a rare allocation of Google's internal hardware. Anthropic cited the cost-effectiveness and efficiency of TPUs, along with its extensive experience using them to train and run Claude models, as key reasons for the choice.

The deal also includes additional Google Cloud services, positioning TPUs as a key alternative to NVIDIA's chips. The massive investment is fueled by Anthropic's rapid growth, driven by enterprise adoption of its products, and its annualized revenue run rate is projected to more than double by 2026.

This transaction highlights the intense demand for computing power in the AI industry. For context, Anthropic's competitor OpenAI has secured deals for roughly 26 gigawatts of computing capacity, an endeavor potentially costing over one trillion dollars.

ICgoodFind: This deep partnership underscores the strategic value of AI chips like TPUs and signals a continued escalation in the compute arms race.